- 1. Supervised learning

- 2. Unsupervised learning

- 3. Semi-supervised learning

- 4. Reinforcement learning

- 5. Most commonly used machine learning algorithms

- 6. Linear regression and linear classifier

- 7. Logistic regression

- 8. Decision Trees

- 9. k-means method

- 10. Principal component analysis

- 11. Neural networks

- 12. Conclusion

- 13. Recommended reading

Many articles about [machine learning](https://ru.wikipedia.org/wiki/Machine_learning) algorithms provide great definitions, but they don't make it easy to choose which algorithm you should use. Read this article!

When I started my data science journey, I often faced the problem of choosing the most suitable algorithm for my particular task. If you're like me, when you open an article about machine learning algorithms, you see hundreds of detailed descriptions. The paradox is that they don't make it any easier to choose which one to use.

In this article for Statsbot I will try to explain the basic concepts and give some intuition for using different kinds of machine learning algorithms for different tasks. At the end of the article, you will find a structured overview of the main features of the described algorithms.

First of all, four types of machine learning tasks should be distinguished:

- Supervised learning

- Unsupervised learning

- Semi-supervised learning

- Reinforcement learning

Supervised learning

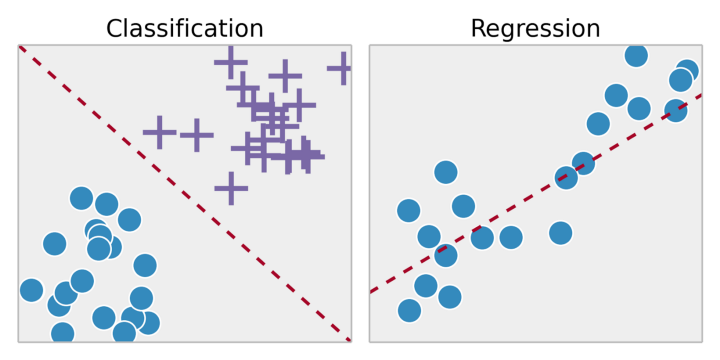

Supervised learning is the task of training a system on a labeled training dataset, in which every object comes with the correct answer. By fitting the model to the training dataset, we want to find the optimal model parameters for predicting the answers on other objects (test datasets). If the answer is a real number, this is a regression problem. If the set of possible answers contains a limited number of unordered values, this is a classification problem.

Unsupervised learning

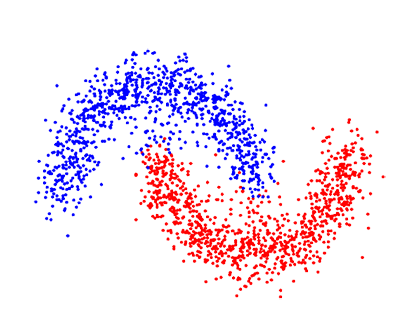

In unsupervised learning, we have less information about objects. In particular, the training dataset has no labels that assign objects to predefined classes. What is our goal now? We can look for similarities between groups of objects and assign them to the corresponding clusters. Some objects may differ greatly from all clusters, and we assume that these objects are anomalies.

Semi-supervised learning

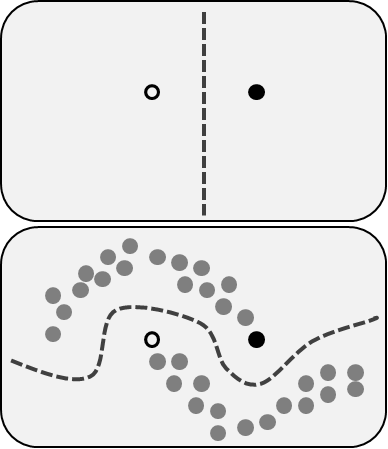

Semi-supervised learning combines both of the problems we described earlier: it uses both labeled and unlabeled data. This is a great opportunity for those who cannot afford to label all of their data. The method can significantly increase accuracy, because we can use unlabeled data in the training dataset together with a small amount of labeled data.

Reinforcement learning

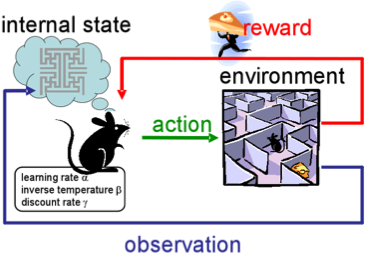

Reinforcement learning is unlike any of our previous tasks because here we have neither predefined labeled data nor unlabeled datasets. Reinforcement learning is a field of machine learning concerned with how software agents should take actions in some environment in order to maximize some notion of cumulative reward.

Imagine that you are a robot in some strange place. You can perform actions and receive rewards from the environment for them. After each action, your behavior becomes more complex and intelligent, so you train yourself to behave in the most efficient way at each step. In biology, this is called adaptation to the natural environment.
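To make the "act, receive a reward, improve" loop more concrete, here is a minimal Python sketch of one of the simplest reinforcement learning settings: a multi-armed bandit played with an epsilon-greedy strategy. It is a deliberately simplified illustration (a bandit has actions and rewards but no changing states); the reward probabilities, `epsilon`, the number of steps, and the function name are hypothetical choices.

```python
import random


def epsilon_greedy_bandit(arm_probs, steps=5000, epsilon=0.1, seed=0):
    """Toy agent in a toy environment: pulling arm a returns reward 1 with
    (hidden) probability arm_probs[a], else 0. The agent estimates each arm's
    value from experience and mostly picks the best one (exploitation),
    trying a random arm with probability epsilon (exploration)."""
    rng = random.Random(seed)
    n_arms = len(arm_probs)
    counts = [0] * n_arms      # how often each action was taken
    values = [0.0] * n_arms    # running mean reward of each action
    total_reward = 0
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_arms)                        # explore
        else:
            action = max(range(n_arms), key=lambda a: values[a])  # exploit
        reward = 1 if rng.random() < arm_probs[action] else 0     # environment's response
        counts[action] += 1
        # incremental update of the mean reward for the chosen action
        values[action] += (reward - values[action]) / counts[action]
        total_reward += reward
    return values, counts, total_reward


values, counts, total = epsilon_greedy_bandit([0.2, 0.5, 0.8])
print("estimated values:", [round(v, 2) for v in values])
print("most chosen arm:", counts.index(max(counts)), "cumulative reward:", total)
```

Over time the agent discovers that the last arm pays off most often and chooses it most of the time, which is what maximizing cumulative reward means here. Full reinforcement learning problems add states that change in response to the agent's actions.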

Most commonly used machine learning algorithms

Now that we are familiar with the types of machine learning problems, let's look at the most popular algorithms with their application in real life.

Linear regression and linear classifier

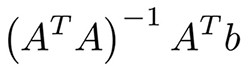

These are probably the simplest machine learning algorithms. You have features x1, ..., xn of the objects (matrix A) and labels (vector b). Your goal is to find the optimal weights w1, ..., wn and bias for these features according to some loss function, for example, mean squared error (MSE) or mean absolute error (MAE) for a regression problem. In the case of mean squared error, the least squares method gives an exact solution (assuming A includes a column of ones, so that the bias is part of w):

$$w = (A^\top A)^{-1} A^\top b$$

In practice, it's easier to optimize the loss with gradient descent, which is much more computationally efficient. Despite its simplicity, this algorithm works very well when you have thousands of features, for example, a bag of words or n-grams in text analysis. More complex algorithms tend to overfit when there are many features and the dataset is not huge, while linear regression still provides decent quality.
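The closed-form solution and the gradient-descent alternative can be sketched with NumPy as follows. This is a minimal illustration, assuming synthetic data; the learning rate `lr`, the number of `epochs`, and the helper names are hypothetical, and the bias is handled by appending a column of ones to A.

```python
import numpy as np


def add_bias_column(A):
    """Append a column of ones so that the last weight acts as the bias."""
    return np.hstack([A, np.ones((A.shape[0], 1))])


def fit_least_squares(A, b):
    """Closed form w = (A^T A)^{-1} A^T b, solved as a linear system
    instead of inverting the matrix explicitly."""
    A1 = add_bias_column(A)
    return np.linalg.solve(A1.T @ A1, A1.T @ b)


def fit_gradient_descent(A, b, lr=0.1, epochs=2000):
    """Minimise the mean squared error with full-batch gradient descent."""
    A1 = add_bias_column(A)
    w = np.zeros(A1.shape[1])
    for _ in range(epochs):
        residual = A1 @ w - b                     # prediction errors
        grad = 2.0 / len(b) * (A1.T @ residual)   # gradient of the MSE
        w -= lr * grad
    return w


rng = np.random.default_rng(0)
A = rng.normal(size=(200, 3))                     # 200 objects, 3 features
b = A @ np.array([1.5, -2.0, 0.5]) + 3.0 + 0.01 * rng.normal(size=200)

print(np.round(fit_least_squares(A, b), 2))      # weights close to 1.5, -2.0, 0.5 and bias close to 3.0
print(np.round(fit_gradient_descent(A, b), 2))   # gradient descent reaches (almost) the same weights
```

On a problem this small, the closed form is perfectly fine; gradient descent pays off when the number of features or objects makes forming and solving `A1.T @ A1` expensive.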

To prevent overfitting, we often use regularization methods such as lasso and ridge. The idea is to add the sum of the absolute values of the weights (lasso) or the sum of the squares of the weights (ridge) to the loss function. You can find a detailed tutorial on these methods in the recommended reading at the end of the article.
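A minimal NumPy sketch of both penalties, assuming the MSE loss from above and a regularization strength `alpha` (the function names and data are hypothetical). Ridge has a closed-form solution, shown below; lasso has none and is usually solved iteratively (for example, with coordinate descent), so only its loss is computed here.

```python
import numpy as np


def regularized_mse(w, A, b, alpha, kind):
    """Mean squared error plus a weight penalty:
    lasso adds alpha * sum(|w|), ridge adds alpha * sum(w ** 2)."""
    mse = np.mean((A @ w - b) ** 2)
    if kind == "lasso":
        return mse + alpha * np.sum(np.abs(w))
    if kind == "ridge":
        return mse + alpha * np.sum(w ** 2)
    raise ValueError("kind must be 'lasso' or 'ridge'")


def fit_ridge(A, b, alpha):
    """Exact minimiser of regularized_mse(..., kind="ridge"). Setting the gradient
    2/n * A^T (A w - b) + 2 * alpha * w to zero gives
    (A^T A + n * alpha * I) w = A^T b. No bias term, for brevity."""
    n, d = A.shape
    return np.linalg.solve(A.T @ A + n * alpha * np.eye(d), A.T @ b)


rng = np.random.default_rng(1)
A = rng.normal(size=(50, 5))
b = A @ np.array([2.0, 0.0, -1.0, 0.0, 0.5]) + 0.1 * rng.normal(size=50)
for alpha in (0.0, 0.1, 1.0):
    w = fit_ridge(A, b, alpha)
    print(alpha, np.round(w, 2), "norm:", round(float(np.linalg.norm(w)), 3))
```

As `alpha` grows, the ridge weights shrink toward zero, which is exactly how the penalty fights overfitting.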

Logistic regression

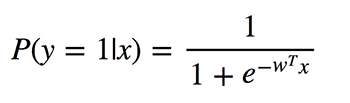

Despite the word "regression" in its name, logistic regression is a classification algorithm, so do not confuse it with regression methods. Logistic regression performs binary classification, so the labeled outputs are binary. Let's define P(y = 1 | x) as the conditional probability that the output y is 1, given the input feature vector x. The coefficients w are the weights the model wants to learn.

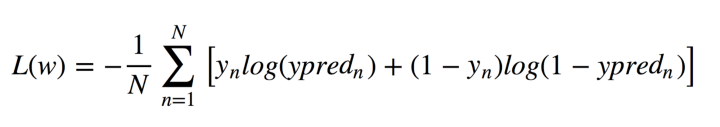

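Since this algorithm calculates the probability of belonging to each class, we must take into account how far the predicted probability is from 0 or 1 and average this over all objects, as we did with linear regression. Such a loss function is the average cross entropy over N objects:

$$L = -\frac{1}{N}\sum_{i=1}^{N}\left[y_i \log y^{\mathrm{pred}}_i + (1 - y_i)\log\left(1 - y^{\mathrm{pred}}_i\right)\right]$$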

Do not panic! I will make it easy for you. Let y be the correct answers (0 or 1) and y_pred the predicted probabilities. If y is 0, the first term in the sum is 0, and the second term, -log(1 - y_pred), gets smaller the closer y_pred is to 0, by the properties of the logarithm. The case where y is 1 is symmetric.
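Here is a direct Python translation of the average cross entropy, showing the behavior just described for y = 0. The function name is hypothetical, and the `eps` clipping is an added assumption that keeps the logarithm finite when a prediction is exactly 0 or 1.

```python
import math


def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Average cross entropy for binary labels y_true (0 or 1) and
    predicted probabilities y_pred of class 1."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1 - eps)   # clip to avoid log(0)
        total += y * math.log(p) + (1 - y) * math.log(1 - p)
    return -total / len(y_true)


print(binary_cross_entropy([0], [0.1]))  # ≈ 0.105: confident and correct, small loss
print(binary_cross_entropy([0], [0.9]))  # ≈ 2.303: confident and wrong, large loss
```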

What's good about logistic regression? It takes a linear combination of features and applies a non-linear function (the sigmoid) to it, so it is a tiny instance of a neural network!
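The sketch below trains logistic regression with plain gradient descent on the average cross entropy, assuming the standard sigmoid model P(y = 1 | x) = sigmoid(w · x + bias). The toy two-cluster data, the learning rate, the number of epochs, and the function names are illustrative assumptions, not a reference implementation.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def fit_logistic_regression(X, y, lr=0.5, epochs=3000):
    """Learn weights w (last entry = bias) by gradient descent
    on the average cross entropy."""
    X1 = np.hstack([X, np.ones((X.shape[0], 1))])
    w = np.zeros(X1.shape[1])
    for _ in range(epochs):
        p = sigmoid(X1 @ w)                  # predicted P(y = 1 | x)
        grad = X1.T @ (p - y) / len(y)       # gradient of the average cross entropy
        w -= lr * grad
    return w


def predict_proba(w, X):
    X1 = np.hstack([X, np.ones((X.shape[0], 1))])
    return sigmoid(X1 @ w)


rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-2, 1, size=(100, 2)),   # class 0 around (-2, -2)
               rng.normal(2, 1, size=(100, 2))])   # class 1 around (2, 2)
y = np.array([0] * 100 + [1] * 100)
w = fit_logistic_regression(X, y)
accuracy = np.mean((predict_proba(w, X) > 0.5) == y)
print("weights:", np.round(w, 2), "training accuracy:", accuracy)
```

Note how the gradient has the same form, X^T (prediction - y) / n, as in linear regression; only the sigmoid in the prediction differs.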

Decision Trees

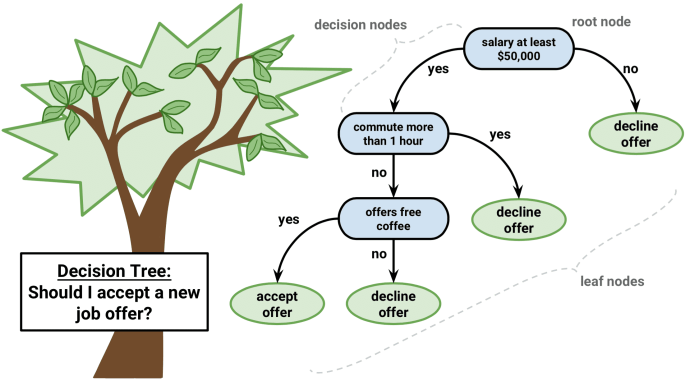

Another popular and easy-to-understand algorithm is the decision tree. Its visualization helps you see the reasoning behind a decision, and building one requires a systematic, documented thought process.

The idea of this algorithm is quite simple. At each node, we choose the best split among all features and all possible split points. Each split is chosen to optimize some criterion. In classification trees, we use cross entropy or the Gini index and choose the split that reduces impurity the most. In regression trees, we minimize the sum of squared errors between the target values of the points that fall into a region and the value we assign to that region.
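For the regression case, the split search can be sketched in a few lines of NumPy. This is a minimal illustration under two assumptions: each region predicts the mean of its targets, and candidate thresholds are midpoints between consecutive distinct feature values. The function name and data are hypothetical.

```python
import numpy as np


def best_regression_split(X, y):
    """Try every feature and every candidate threshold; return the
    (feature, threshold, sse) with the smallest total squared error
    when each side predicts the mean of its targets."""
    best = (None, None, np.inf)
    for j in range(X.shape[1]):
        values = np.unique(X[:, j])                  # sorted distinct values
        for lo, hi in zip(values[:-1], values[1:]):
            threshold = (lo + hi) / 2
            left = y[X[:, j] <= threshold]
            right = y[X[:, j] > threshold]
            # squared error of both regions around their own means
            sse = np.sum((left - left.mean()) ** 2) + np.sum((right - right.mean()) ** 2)
            if sse < best[2]:
                best = (j, threshold, sse)
    return best


X = np.array([[1.0, 2.0], [2.0, 5.0], [3.0, 1.0], [4.0, 4.0], [5.0, 3.0]])
y = np.array([1.0, 1.2, 0.9, 5.0, 5.1])
feature, threshold, sse = best_regression_split(X, y)
print(feature, threshold, round(float(sse), 4))  # feature 0 split at 3.5 separates small and large targets
```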

We repeat this procedure recursively for each node and stop when we meet a stopping criterion, which can range from the minimum number of objects in a leaf to the maximum height of the tree. Individual trees are rarely used on their own, but in ensembles with many other trees they form very efficient algorithms, such as random forest or gradient boosting.
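Putting the split search and the recursion together, here is a minimal pure-Python sketch of a classification tree that uses the Gini index. The stopping parameters `max_depth` and `min_samples`, the tuple representation of internal nodes, and all function names are hypothetical choices made for brevity; ensembles such as random forest or gradient boosting are not shown.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 - sum over classes of p_k^2."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(rows, labels):
    """Return the (feature, threshold) with the lowest weighted Gini
    impurity, or None if no split improves on the parent node."""
    best, best_score = None, gini(labels)
    for j in range(len(rows[0])):
        for threshold in sorted({r[j] for r in rows})[:-1]:
            left = [l for r, l in zip(rows, labels) if r[j] <= threshold]
            right = [l for r, l in zip(rows, labels) if r[j] > threshold]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if score < best_score:
                best, best_score = (j, threshold), score
    return best


def build_tree(rows, labels, depth=0, max_depth=3, min_samples=2):
    """Split recursively; stop at max_depth, when too few objects remain,
    when the node is pure, or when no split reduces impurity."""
    majority = Counter(labels).most_common(1)[0][0]
    if depth >= max_depth or len(labels) < min_samples or gini(labels) == 0.0:
        return majority                      # leaf: predict the majority class
    split = best_split(rows, labels)
    if split is None:
        return majority
    j, t = split
    left = [(r, l) for r, l in zip(rows, labels) if r[j] <= t]
    right = [(r, l) for r, l in zip(rows, labels) if r[j] > t]
    return (j, t,
            build_tree([r for r, _ in left], [l for _, l in left], depth + 1, max_depth, min_samples),
            build_tree([r for r, _ in right], [l for _, l in right], depth + 1, max_depth, min_samples))


def predict(tree, row):
    while isinstance(tree, tuple):           # internal node: (feature, threshold, left, right)
        j, t, left, right = tree
        tree = left if row[j] <= t else right
    return tree


rows = [[2.0, 1.0], [3.0, 1.5], [1.0, 3.0], [6.0, 5.0], [7.0, 6.5], [8.0, 5.5]]
labels = ["a", "a", "a", "b", "b", "b"]
tree = build_tree(rows, labels)
print(tree)                       # (0, 3.0, 'a', 'b')
print(predict(tree, [7.5, 6.0]))  # b
```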

k-means method

Sometimes you don't know any labels, and your goal is to assign labels according to the features of the objects. This is called the clustering problem.

Suppose you want to divide all data objects into k clusters. You choose k random points from your data and call them cluster centers. Every other object is assigned to the cluster of its nearest center. Then each cluster center is recomputed as the mean of its assigned objects, and the process is repeated until convergence.
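The procedure above (often called Lloyd's algorithm) fits in a short NumPy function. This is a minimal sketch, assuming Euclidean distance and two well-separated synthetic blobs; the function name, `n_iter`, and `seed` are hypothetical, and a center that loses all its objects is simply left in place.

```python
import numpy as np


def kmeans(X, k, n_iter=100, seed=0):
    """Pick k random objects as initial centres, then alternate
    assignment and update steps until the centres stop moving."""
    rng = np.random.default_rng(seed)
    centers = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        # assignment step: index of the nearest centre for every object
        dists = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # update step: each centre moves to the mean of its objects
        new_centers = np.array([
            X[labels == c].mean(axis=0) if np.any(labels == c) else centers[c]
            for c in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    # final assignment consistent with the returned centres
    labels = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2).argmin(axis=1)
    return centers, labels


rng = np.random.default_rng(42)
X = np.vstack([
    rng.normal([0.0, 0.0], 0.5, size=(50, 2)),     # blob around (0, 0)
    rng.normal([10.0, 10.0], 0.5, size=(50, 2)),   # blob around (10, 10)
])
centers, labels = kmeans(X, k=2)
print(np.round(centers, 1))
```

Because the result depends on the random initial centers, a common remedy is to run the algorithm with several seeds and keep the solution with the smallest within-cluster sum of squared distances; choosing k itself remains up to you.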

This is the clearest clustering technique, but it still has some drawbacks. First of all, you must know the number of clusters in advance, which we usually cannot know. Secondly, the result depends on the points randomly chosen at the beginning, and the algorithm does not guarantee that we will reach the global minimum of the objective function.

There are a number of clustering methods with various advantages and disadvantages, which you can explore in the recommended reading.

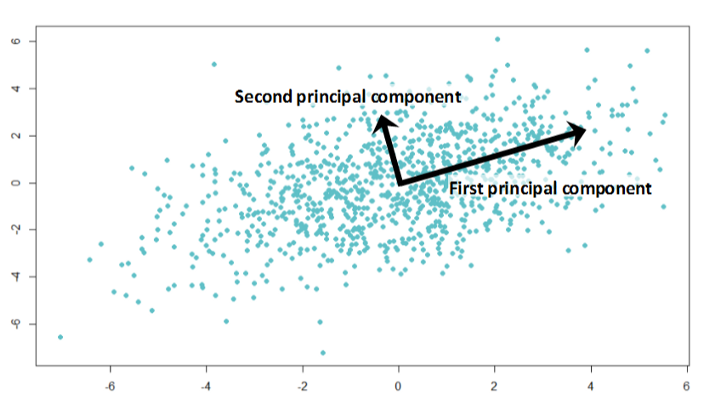

Principal component analysis

Have you ever studied for a difficult exam at night, or even in the morning right before it starts? It is impossible to remember all the information you need, but you want to maximize what you can remember in the time available, for example, by first studying the theorems that appear in many exams.

Principal component analysis is based on the same idea. This algorithm performs dimensionality reduction. Sometimes you have a large number of features that are likely highly correlated, and models can easily overfit on such huge amounts of data. Then you can apply this algorithm.

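The algorithm projects the data onto a few vectors chosen to maximize the variance of the projected data, so that as little information as possible is lost. Surprisingly, these vectors are the eigenvectors of the correlation matrix of the dataset's features.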

Now the algorithm is clear:

- We calculate the correlation matrix of the feature columns and find the eigenvectors of this matrix.

- We take the eigenvectors with the largest eigenvalues and project all objects onto them.

The new features are the coordinates of the projection, and their number equals the number of eigenvectors you project onto.
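The two steps can be sketched with NumPy as below. This assumes the features are standardized before projection (which matches working with the correlation rather than the covariance matrix); the function name and the synthetic data with two strongly correlated features are hypothetical.

```python
import numpy as np


def pca_correlation(X, n_components):
    """Standardise the features, take the eigenvectors of their correlation
    matrix with the largest eigenvalues, and project the objects onto them."""
    Z = (X - X.mean(axis=0)) / X.std(axis=0)     # standardised features
    corr = np.corrcoef(X, rowvar=False)          # feature correlation matrix
    eigvals, eigvecs = np.linalg.eigh(corr)      # symmetric matrix: eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:n_components]
    components = eigvecs[:, order]               # columns are the chosen eigenvectors
    return Z @ components, eigvals[order]


rng = np.random.default_rng(0)
t = rng.normal(size=500)
X = np.column_stack([t, 2 * t + 0.1 * rng.normal(size=500), rng.normal(size=500)])
projected, variances = pca_correlation(X, n_components=2)
print(projected.shape)           # (500, 2)
print(np.round(variances, 2))    # first eigenvalue near 2: the two correlated features collapse into one direction
```

Each eigenvalue equals the variance of the data along its eigenvector, so keeping the largest ones keeps the most information.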

Neural networks

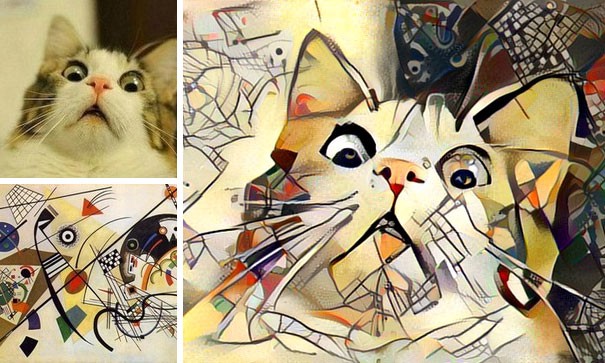

I already mentioned neural networks when we talked about logistic regression. There are many different architectures that are valuable in specific applications. Most often, a neural network is a sequence of layers or components with linear connections between them, each followed by a non-linearity.
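A minimal NumPy sketch of such a stack of layers: each layer is a linear map followed by a non-linearity. ReLU for hidden layers and a sigmoid on the output are assumed choices, the weights are random (untrained), and the function names are hypothetical.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def mlp_forward(x, layers):
    """Forward pass through a list of (W, b) layers: a linear map followed by
    ReLU for hidden layers and a sigmoid for the last layer."""
    h = x
    for i, (W, b) in enumerate(layers):
        z = h @ W + b                                   # linear connection
        h = relu(z) if i < len(layers) - 1 else 1.0 / (1.0 + np.exp(-z))
    return h


rng = np.random.default_rng(0)
layers = [
    (rng.normal(size=(4, 8)), np.zeros(8)),   # 4 input features -> 8 hidden units
    (rng.normal(size=(8, 1)), np.zeros(1)),   # 8 hidden units -> 1 output
]
out = mlp_forward(rng.normal(size=(5, 4)), layers)  # a batch of 5 objects
print(out.shape)  # (5, 1)
```

With a single layer, this forward pass is exactly logistic regression, which is why logistic regression can be seen as the smallest neural network.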

If you are working with images, deep convolutional neural networks show great results. Their key building blocks are convolutional and pooling layers, which, combined with non-linearities, can capture the characteristic features of images.
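To show what these building blocks compute, here is a minimal NumPy sketch of one convolution (strictly, cross-correlation, as in most deep learning libraries), a ReLU, and 2x2 max pooling on a single-channel image. The hand-made edge-detecting kernel and the toy image are assumptions for illustration; real networks learn their kernels.

```python
import numpy as np


def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation of a single-channel image with one kernel."""
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that don't fill a window are dropped."""
    h, w = feature_map.shape[0] // size, feature_map.shape[1] // size
    trimmed = feature_map[:h * size, :w * size]
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))


image = np.zeros((6, 6))
image[:, 3:] = 1.0                                   # dark left half, bright right half
vertical_edge = np.array([[-1.0, 1.0], [-1.0, 1.0]])  # responds to left-to-right brightness jumps
fmap = np.maximum(conv2d(image, vertical_edge), 0.0)  # convolution followed by ReLU
print(max_pool(fmap))  # the pooled map is [[0, 2], [0, 2]]: the edge survives downsampling
```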

To work with texts and sequences, you'd better choose recurrent neural networks (RNN). RNNs contain long short-term memory (LSTM) modules or gated recurrent units (GRU) and can work with data whose size we do not know in advance. One of the best-known applications of RNNs is machine translation.
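The sketch below shows why an RNN copes with sequences of unknown length: the same weights are applied at every time step, and a hidden state carries information forward. It is a plain tanh RNN cell with random, untrained weights, not the LSTM or GRU modules mentioned above (those replace the tanh update with gated updates); names and sizes are hypothetical.

```python
import numpy as np


def rnn_forward(sequence, W_xh, W_hh, b_h):
    """Vanilla RNN: apply the same weights at every time step; the final
    hidden state summarises the whole sequence, whatever its length."""
    h = np.zeros(W_hh.shape[0])
    for x_t in sequence:                            # any number of steps
        h = np.tanh(x_t @ W_xh + h @ W_hh + b_h)
    return h


rng = np.random.default_rng(0)
input_dim, hidden_dim = 3, 4
W_xh = rng.normal(scale=0.5, size=(input_dim, hidden_dim))
W_hh = rng.normal(scale=0.5, size=(hidden_dim, hidden_dim))
b_h = np.zeros(hidden_dim)

short_seq = rng.normal(size=(2, input_dim))   # sequence of 2 steps
long_seq = rng.normal(size=(7, input_dim))    # sequence of 7 steps
print(rnn_forward(short_seq, W_xh, W_hh, b_h).shape, rnn_forward(long_seq, W_xh, W_hh, b_h).shape)  # (4,) (4,)
```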

Conclusion

I hope that you now understand the general concepts of the most commonly used machine learning algorithms and have an intuition for how to choose one of them for your particular problem. To make things easier for you, I have prepared a structured overview of their main features:

- Linear regression and linear classifier: despite their apparent simplicity, they are very useful when there is a huge number of features, where more complex algorithms suffer from overfitting.

- Logistic regression: the simplest non-linear classifier, combining a linear combination of parameters with a non-linear function (sigmoid) for binary classification.

- Decision trees: often similar to human decision making and easy to interpret, but most commonly used in ensembles such as random forest or gradient boosting.

- K-means: a more primitive but very easy-to-understand algorithm that can be ideal as a baseline for many problems.

- Principal component analysis: an excellent choice for reducing the dimensionality of your feature space with minimal loss of information.

- Neural networks: a new era of machine learning algorithms that can be applied to many problems, but training them requires enormous computational resources.

Recommended reading

- Overview of clustering methods

- The Complete Tutorial on Ridge and Lasso Regression in Python

- YouTube channel about AI for beginners with great tutorials and examples

Article written by: Daniil Korbut | October 27, 2017